Video Calls

As the data consumption of video calls has increased significantly since 2020 (citation needed), I always ask myself when I make a video call: is this video data really needed? One of the features of NVIDIA Maxine is to reduce bandwidth by tracking the face and reconstructing the image from face landmarks. In other words, you animate an avatar of yourself, much like a 3D avatar. Or, as someone commented in the video, it is a real-time deepfake. While this is a technically interesting approach, I would caution that it goes in the same direction as the curation function of social media, which attempts to show you what you want to see. This essay is not an attempt to answer how video should work in a call, but to list how I have been approaching video calls over the last few years.
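To get a feel for why sending landmarks instead of pixels could save bandwidth, here is a rough back-of-envelope sketch in Python. Every number in it is my own assumption for illustration (a 68-point landmark layout, uncompressed 32-bit coordinates, 30 frames per second, and 1.5 Mbit/s for an ordinary compressed video stream); none of them are figures from NVIDIA Maxine, and the function name `landmark_bitrate` is hypothetical.

```python
# Back-of-envelope estimate. Every constant below is an assumption for
# illustration, not a figure published for NVIDIA Maxine.


def landmark_bitrate(num_landmarks: int, coords_per_landmark: int,
                     bytes_per_coord: int, fps: int) -> int:
    """Return the uncompressed bitrate in bits per second."""
    return num_landmarks * coords_per_landmark * bytes_per_coord * 8 * fps


LANDMARKS = 68          # assumed: a common 68-point face landmark layout
COORDS = 2              # assumed: x and y only
BYTES_PER_COORD = 4     # assumed: 32-bit floats, no compression
FPS = 30                # assumed frame rate
VIDEO_BPS = 1_500_000   # assumed bitrate of an ordinary compressed video call

landmark_bps = landmark_bitrate(LANDMARKS, COORDS, BYTES_PER_COORD, FPS)
ratio = VIDEO_BPS / landmark_bps

print(f"landmarks: {landmark_bps / 1000:.1f} kbit/s")
print(f"video:     {VIDEO_BPS / 1000:.1f} kbit/s")
print(f"ratio:     {ratio:.1f}x")
```

Under these assumptions the landmark stream is roughly an order of magnitude smaller than the video stream, even before any compression of the landmark data. A real system would also need to send a reference image of the face at some point, which this sketch ignores.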

During the lockdown in 2020, I collected screenshots every time I made a video call. One of the recurring calls was “Low Frequency Skies”, a daily lunch call organized by Raphaël de Courville. Around May 2020, we started playing with Snap Camera and OBS Studio to alter and mix live video footage. Snap Camera is an app from Snap, the company known for Snapchat. As part of the Snapchat ecosystem, Snap Camera can be installed on a PC or a Mac to apply augmented reality filters to the webcam feed. It also acts as a virtual camera, so the modified video can easily be used as an input for video call software. OBS Studio is a more general-purpose tool for mixing video. In the screenshot, I made a JavaScript sketch that tracks my face and paints it completely black. The result is captured by OBS and sent to the Jitsi video call. OBS Studio is a versatile application; it has its own filters for color correction, and you can overlay text and images, too.

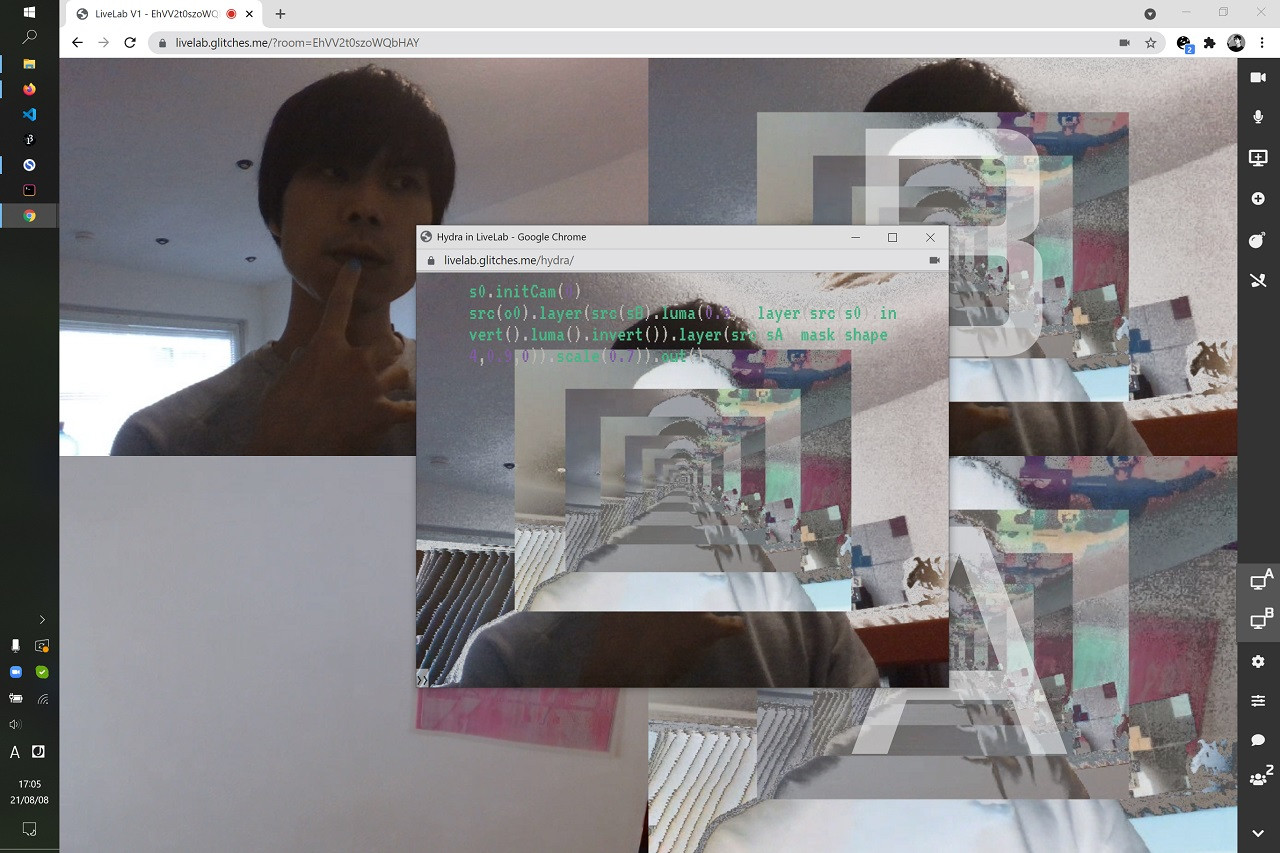

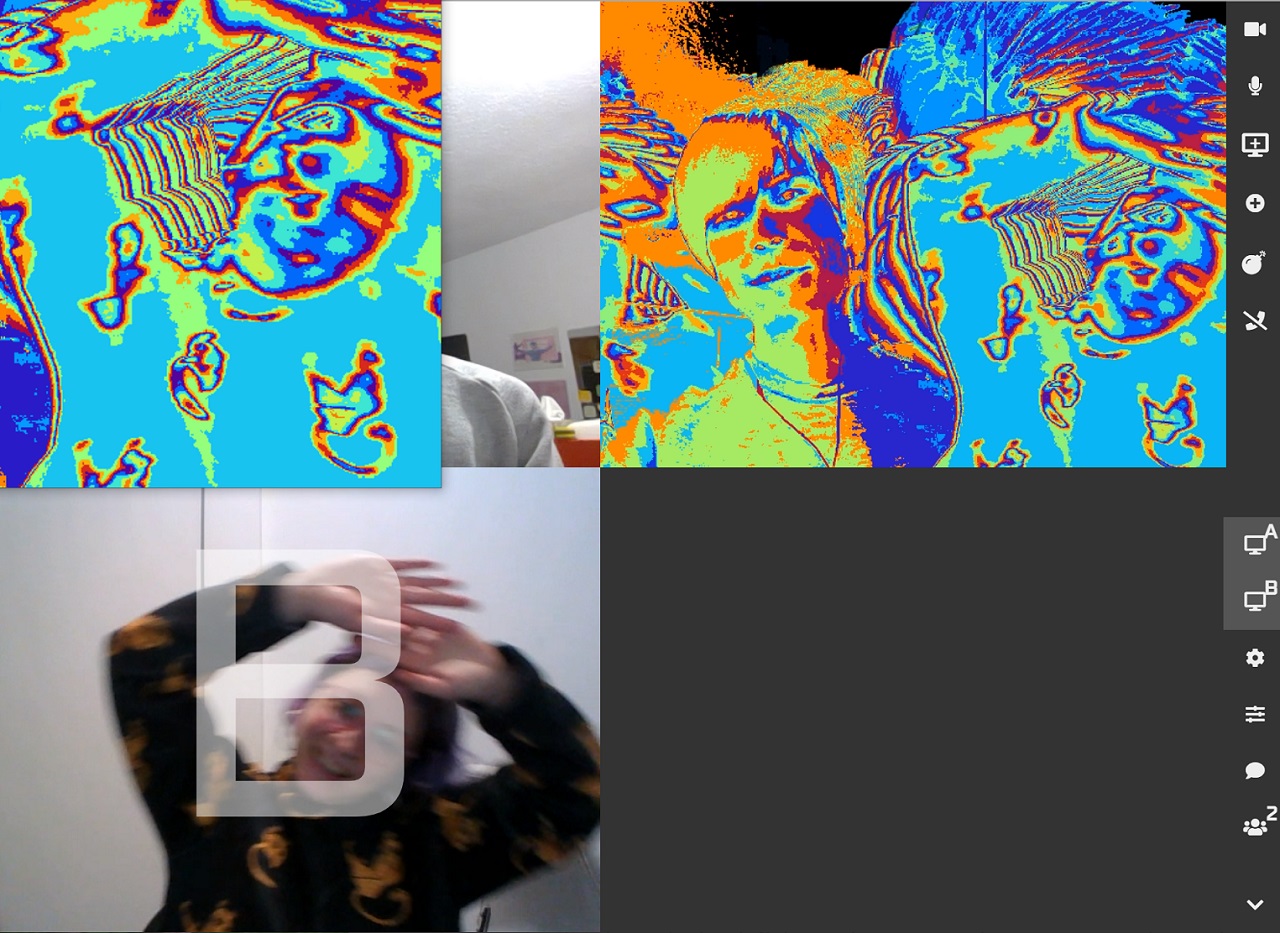

“Best Practices in Contemporary Dance” is an ongoing project with Jorge Guevara that started in July 2020 to find a fluid discourse between dance and technology. Within a video call platform for performance (LiveLab, developed by CultureHub), we modify, alter, corrupt and glitch ourselves as pixels using Hydra, an analog-synth-like live-coding environment developed by Olivia Jack (who is also one of the authors of LiveLab).

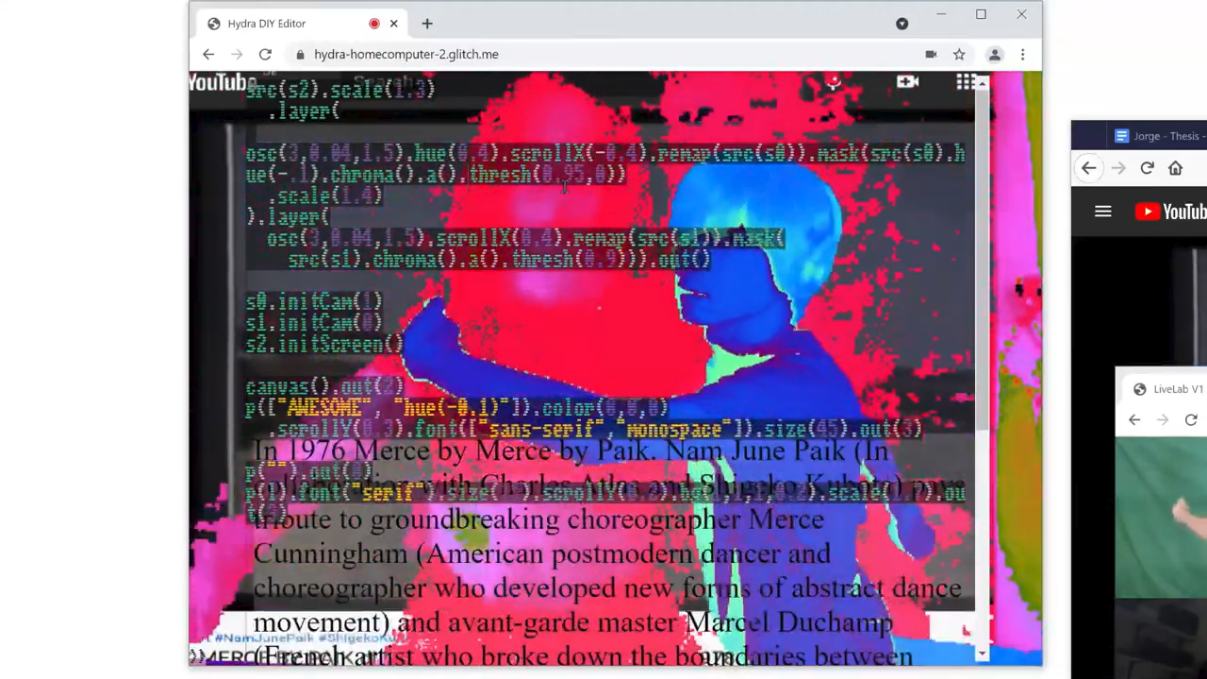

The project with Jorge inspired me to create a video call platform with Hydra integrated so that we can alter our appearance by code, not only in a performance context but also in a casual video call. This is not just a playful feature; it can be thought of as another non-verbal channel for communication. Note that Gilbert Sinnott made a similar project (Hydritsi), which is based on Jitsi. However, I found that hosting Jitsi is not straightforward because of the setup and the required specs, and Hydritsi already comes loaded with several libraries (face tracking, p5.js and Hydra), which complicates the system and may not be beginner-friendly. I decided to integrate Hydra inside LiveLab instead (cover image and the image below). Currently there is no demo link, and there are some bugs. From the initial “testing”, Hydra inside LiveLab was an interesting experience: it creates a sense of being in the same space, yet it is different from approaches like virtual reality or spatial video calls such as WorkAdventure.