Tidal Club: New Moon Marathon

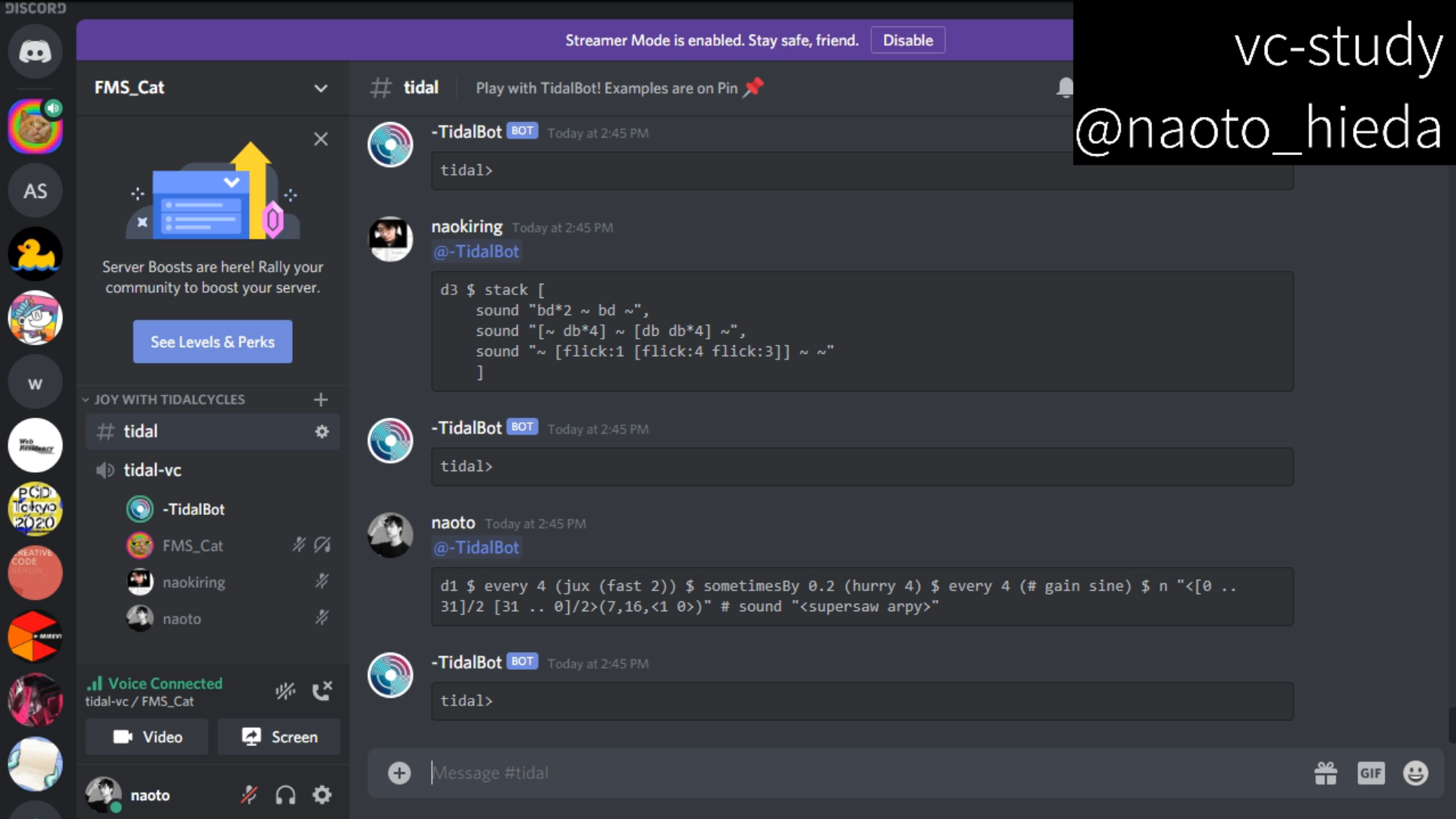

We participated in Tidal Club’s New Moon Marathon livestream as the collective “vc-study”, performing live-coded sound with TidalCycles. The event was a 24-hour non-stop livestream with 72 performances of 20 minutes each. vc-study is an informal collective of Japanese artists and programmers who learn a different creative-coding tool from scratch every week. One of the members, @FMS_Cat, created tidal-bot, which runs on Discord, interprets TidalCycles code posted in the chat, and outputs sound to the voice chat. It is a neat implementation because many people can join and perform together, a goal similar to that of Estuary but with a different approach.

TidalCycles (and Haskell) had been on our list of things to learn, and we found that the New Moon stream was open for participation. It was written that

all welcome to grab one, especially beginners.

which encouraged me to register and to convince Naoaki and FMS_Cat to participate in the jam. It also helped that I had been around the live-coding scene for a while (although I had not performed until recently) and knew that the crowd is more welcoming than critical. We were absolute beginners and had 10 days to practice. Although we once talked about asking an experienced user to teach us, I decided to learn it on my own. The confidence came from having written the Hydra Book: hacking into a live-coding tool to find what is not intended, and systematically understanding the misuse, seems to be my strength.

I followed the tutorial on the TidalCycles website and then started playing with the patterns. I also read some code in Atsushi Tadokoro’s repository to pick up hands-on techniques. Then I learned that a densely packed pattern of n values combined with sound creates a synth-like effect rather than a rhythmic pattern:

d1 $ n "[0 .. 31](3,8)" # sound "supersaw"

Then, after playing with the pattern string, I started to add effects conditionally with sometimesBy or every:

d1 $ every 2 (# crush "2") $ n "[0 .. 31](3,8)" # sound "supersaw"
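The line above uses every. A sometimesBy variant could look like the following sketch, to be evaluated in a running Tidal session like the other lines here. The probability 0.3 is an arbitrary value chosen for illustration, not one taken from the performance.

```haskell
-- Apply the bitcrusher to roughly 30% of the events
-- instead of on every second cycle.
-- The probability 0.3 is an assumed value for illustration.
d1 $ sometimesBy 0.3 (# crush "2") $ n "[0 .. 31](3,8)" # sound "supersaw"
```

Because the choice is random per event, the crushed notes land in different places on each cycle, whereas every 2 alternates whole cycles predictably.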

pan and gain can also be driven by the continuous patterns saw and rand, which act like low-frequency oscillators:

d1 $ every 2 (# crush "2") $ n "[0 .. 31](3,8)" # sound "supersaw" # gain saw
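As a further sketch of the same idea, pan can take rand while gain takes a rescaled saw. The bounds 0.7 and 1 passed to range are assumed values for illustration, not settings from the performance.

```haskell
-- rand gives each event a random position between left (0) and right (1).
-- range rescales saw from 0..1 to 0.7..1 so the volume ramps up over
-- each cycle without dipping toward zero.
-- The bounds 0.7 and 1 are assumed values for illustration.
d1 $ n "[0 .. 31](3,8)" # sound "supersaw" # pan rand # gain (range 0.7 1 saw)
```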

What I found interesting is that, at the end, prefixing the line with slow N decomposes the built-up synth into a rather standard Tidal beat:

d1 $ slow 8 $ every 2 (# crush "2") $ n "[0 .. 31](3,8)" # sound "supersaw"

This is the basic strategy for building up the sound, and I found most of these techniques through trial and error. Here is the archive of the performance; as seen in the video, the performance was done solely on Discord, and I simply streamed the audio and video of the Discord window through OBS Studio. My favorite part starts around 17:00, when I unconsciously chose # gain rand, which created a happy accident of bursting sawtooth waveforms.

Through the learning process, I quickly understood why TidalCycles is used by many live coders. Although Haskell is difficult to understand, TidalCycles gives enough room for trial and error, which is a typical process in live coding. The Discord interface also added playfulness to the performance. I must mention the performance by Yosuke Hayashi and Haus in February at Processing Community Day Tokyo, which we curated. They built a custom chat interface and live-coding sound engine called p-code so that anyone on site with a mobile device could log into the system and live-code the sound. PixelJam (temporarily down) by Olivia Jack took a similar approach, although it is a collaborative video synthesizer. I believe that such systems have been underrated. A text-based live-coding platform, or a program-interpreter bot for an existing chat platform, can drastically change the way artists code, not only for a one-off performance but also for a recurrent practice or a durational performance.

I would like to thank Alex McLean for making this beautiful event happen, everyone who gave amazing performances, and the vc-study fellows for willingly joining my spontaneous proposal. I am looking forward to performing live coding more often with strange setups.

On a side note, although I wrote “tokyo - cologne” on the event page, meaning me in Cologne and the others in Tokyo, I was in fact out of town and performed from Bielefeld University in Bielefeld, Germany. Besides preparing for the performance, I started working on their permanent 16-channel speaker setup for sound experiments. In the end, I installed TidalCycles with 16-channel panning on the computer, and after the performance, I ran scripts from it.
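A pattern for such a setup might look like the sketch below. It assumes that SuperDirt has been started with 16 output channels, in which case pan values from 0 to 1 are spread across all channels rather than just left and right. This is not the script that ran in Bielefeld, only an illustration of the idea.

```haskell
-- Sixteen notes per cycle, with saw sweeping the pan position
-- from 0 towards 1 over each cycle.
-- Assumes SuperDirt is configured for 16 output channels, so the
-- sweep should travel across the whole speaker array once per cycle.
d1 $ n "0 .. 15" # sound "supersaw" # pan saw
```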

As the computer sits in the server rack and I wanted to edit the Tidal script remotely, I set up Teletype for Atom. However, Teletype does not allow remote command execution, so in the current setup an AppleScript virtually presses the Control and Return keys every 2 seconds to evaluate the line. The next step is to implement a system similar to tidal-bot that receives commands over the network and plays them on the sound system. For such a rather complex setup, TidalCycles can be an interesting option to abstract the physical layers and let artists focus on the creation.